First-order logic

From Wikipedia, the free encyclopedia

First-order logic (FOL) is a system of mathematical logic, extending propositional logic (equivalently, sentential logic), which is in turn extended by second-order logic. It is also called first-order predicate calculus (FOPC).

The atomic sentences of first-order logic have the form P(t1, ..., tn) (a predicate with one or more "subjects") rather than being propositional letters as in propositional logic. This is usually written without parentheses or commas, as below.

The new ingredient of first-order logic not found in propositional logic is quantification: where φ is any sentence, the new constructions ∀x φ and ∃x φ, read "for all x, φ" and "for some x, φ", are introduced. For convenience in explaining our intentions, we write φ as φ(x) and let φ(a) represent the result of replacing all (free) occurrences of x in φ(x) with a, then ∀x φ(x) means that φ(a) is true for any value of a and ∃x φ(x) means that there is an a such that φ(a) is true. Values of the variables are taken from an understood universe of discourse; a refinement of first-order logic allows variables ranging over different sorts of object.

First-order logic has sufficient expressive power for the formalization of virtually all of mathematics. A first-order theory consists of a set of axioms (usually finite or recursively enumerable) and the statements deducible from them. The usual set theory ZFC is an example of a first-order theory, and it is generally accepted that all of classical mathematics can be formalized in ZFC. There are other theories that are commonly formalized independently in first-order logic (though they do admit implementation in set theory) such as Peano arithmetic.

Contents |

Defining first-order logic

A predicate calculus consists of

- formation rules (i.e. recursive definitions for forming well-formed formulas).

- transformation rules (i.e. inference rules for deriving theorems).

- a (possibly countably infinite) set of axioms or axiom schemata.

The axioms considered here are the logical axioms which are part of the predicate calculus. Further, non-logical axioms are added in specific first-order theories: these are not regarded as truths of logic but as truths of the particular theory under consideration.

When the set of axioms is infinite, it is required that there is an algorithm which can decide for a given well-formed formula whether it is an axiom or not. Furthermore, there should be an algorithm which can decide whether a given application of an inference rule is correct or not.

It is important to note that the predicate calculus can be formalized in many equivalent ways; there is nothing canonical about the axioms and rules of inference given here, but any formalization will yield the same theorems of logic (and deduce the same theorems from any set of non-logical axioms).

Vocabulary

The "vocabulary" is composed of

- A set of predicate variables (or relations) each with some valence (or arity) ≥1, which are often denoted by uppercase letters P, Q, R,...

- A set of constants, often denoted by lowercase letters a, b, c,... .

- A set of functions, each of some valence ≥ 1, which are often denoted by lowercase letters f, g, h,... .

- An infinite set of variables, often denoted by lowercase letters x, y, z,... .

- Symbols denoting logical operators (or connectives): ¬ (logical not), ∧ (logical and), ∨ (logical or), → (logical conditional), ↔ (logical biconditional).

- Symbols denoting quantifiers: ∀ (universal quantification), ∃ (existential quantification).

- Left and right parenthesis.

- An identity or equality symbol = is sometimes but not always included in the vocabulary.

There are several minor variations listed below:

- The set of primitive symbols (operators and quantifiers) often varies. Some symbols may be omitted as primitive and taken as abbreviations instead; e.g. (P ↔ Q) is an abbreviation for (P → Q) ∧ (Q → P). On the other hand, it is possible to include other operators as primitive, such as the truth constants ⊤ for "true" and ⊥ for "false" (these are operators of valence 0), or the Sheffer stroke (P | Q). The minimum number of primitive symbols needed is one, but if we restrict ourselves to the operators listed above, we need three; for example, ¬, ∧, and ∀ suffice.

- Some older books and papers use the notation φ ⊃ ψ for φ → ψ, ~φ for ¬φ, φ & ψ for φ ∧ ψ, and a wealth of notations for quantifiers; e.g., ∀x φ may be written as (x)φ.

- Equality is sometimes considered to be a part of first order logic; if it is, then the equality symbol is included in the vocabulary, and behaves syntactically as a binary predicate. This case is sometimes called first order logic with equality.

- Constants are really the same as functions of valence 0, so it would be possible and convenient to omit constants and allow functions to have any valence. But it is traditional to use the term "function" only for functions of valence at least 1.

- In the definition above relations must have valence at least 1. It is possible to allow relations of valence 0; these could be considered as propositional variables.

- There are many different conventions about where to put parentheses; for example, one might write ∀x or (∀x). Sometimes one uses colons or full stops instead of parentheses to make formulas unambiguous. One interesting but rather unusual convention is "Polish notation", where one omits all parentheses, and writes ∧, ∨, and so on in front of their arguments rather than between them. Polish notation is compact and elegant, but rare because it is hard for humans to read it.

- A technical observation (of which one can make what one will, philosophically) is that if there is a function symbol of arity 2 representing an ordered pair (or predicate symbols of arity 2 representing the projection relations of an ordered pair) then one can dispense entirely with functions or predicates of arity > 2. Of course the pair or projections need to satisfy the natural axioms.

The sets of constants, functions, and relations are usually considered to form a language, while the variables, logical operators, and quantifiers are usually considered to belong to the logic. For example, the language of group theory consists of one constant (the identity element), one function of valence 1 (the inverse) one function of valence 2 (the product), and one relation of valence 2 (equality), which would be omitted by authors who include equality in the underlying logic.

Formation rules

The formation rules define the terms, formulas, and the free variables in them as follows.

The set of terms is recursively defined by the following rules:

- Any constant is a term (with no free variables).

- Any variable is a term (whose only free variable is itself).

- Any expression f(t1,...,tn) of n ≥ 1 arguments (where each argument ti is a term and f is a function symbol of valence n) is a term. Its free variables are the free variables of any of the terms ti.

- Closure clause: Nothing else is a term.

The set of well-formed formulas (usually called wffs or just formulas) is recursively defined by the following rules:

- Simple and complex predicates If P is a relation of valence n ≥ 1 and the ai are terms then P(a1,...,an) is well-formed. Its free variables are the free variables of any of the terms ai. If equality is considered part of logic, then (a1 = a2) is well formed. All such formulas are said to be atomic.

- Inductive Clause I: If φ is a wff, then ¬φ is a wff. Its free variables are the free variables of φ.

- Inductive Clause II: If φ and ψ are wffs, then (φ ∧ ψ), (φ ∨ ψ), (φ → ψ), (φ ↔ ψ) are wffs. Its free variables are the free variables of φ or ψ.

- Inductive Clause III: If φ is a wff, then ∀x φ and ∃x φ are wffs (and similarly for any other variable in place of x). Its free variables are the free variables of φ or ψ other than x. (Any instance of x (or other variable replacing x in this construction) is said to be bound — not free — in ∀x φ and ∃x φ .)

- Closure Clause: Nothing else is a wff.

In practice, if P is a relation of valence 2, we often write "a P b" instead of "P a b"; for example, we write 1 < 2 instead of <(1 2). Similarly if f is a function of valence 2, we sometimes write "a f b" instead of "f(a b)"; for example, we write 1 + 2 instead of +(1 2). It is also common to omit some parentheses if this does not lead to ambiguity.

Sometimes it is useful to say that "P(x) holds for exactly one x", which can be expressed as ∃!x P(x). This can also be expressed as ∃x (P(x) ∧ ∀y (P(y) → (x = y))) .

Examples: The language of ordered abelian groups has one constant 0, one unary function −, one binary function +, and one binary relation ≤. So

- 0, x, y are atomic terms

- +(x, y), +(x, +(y, −(z))) are terms, usually written as x + y, x + (y + (−z))

- =(+(x, y), 0), ≤(+(x, +(y, −(z))), +(x, y)) are atomic formulas, usually written as x + y = 0, x + y - z ≤ x + y,

- (∀x ∃y ≤( +(x, y), z)) ∧ (∃x =(+(x, y), 0)) is a formula, usually written as (∀x ∃y x + y ≤ z) ∧ (∃x x + y = 0).

Substitution

If t is a term and φ(x) is a formula possibly containing x as a free variable, then φ(t) is defined to be the result of replacing all free instances of x by t, provided that no free variable of t becomes bound in this process. If some free variable of t becomes bound, then to substitute t for x it is first necessary to change the names of bound variables of φ to something other than the free variables of t. To see why this condition is necessary, consider the formula φ(x) given by ∀y y ≤ x ("x is maximal"). If t is a term without y as a free variable, then φ(t) just means t is maximal. However if t is y the formula φ(y) is ∀y y ≤ y which does not say that y is maximal. The problem is that the free variable y of t (=y) became bound when we substituted y for x in φ(x). So to form φ(y) we must first change the bound variable y of φ to something else, say z, so that φ(y) is then ∀z z ≤ y. Forgetting this condition is a notorious cause of errors.

Equality

There are several different conventions for using equality (or identity) in first order logic. This section summarizes the main ones. The various conventions all give essentially the same results with about the same amount of work, and differ mainly in terminology.

- The most common convention for equality is to include the equality symbol as a primitive logical symbol, and add the axioms for equality to the axioms for first order logic. The equality axioms are

-

- x = x

- x = y → f(...,x,...) = f(...,y,...) for any function f

- x = y → (P(...,x,...) → P(...,y,...)) for any relation P (including = itself)

- x = y → f(...,x,...) = f(...,y,...) for any function f

- x = x

- The next most common convention is to include the equality symbol as one of the relations of a theory, and add the equality axioms to the axioms of the theory. In practice this is almost indistinguishable from the previous convention, except in the unusual case of theories with no notion of equality. The axioms are the same, and the only difference is whether one calls some of them logical axioms or axioms of the theory.

- In theories with no functions and a finite number of relations, it is possible to define equality in terms of the relations, by defining the two terms s and t to be equal if any relation is unchanged by changing s to t in any argument. For example, in set theory with one relation ∈, we would define s = t to be an abbreviation for ∀x (s ∈ x ↔ t ∈ x) ∧ ∀x (x ∈ s ↔ x ∈ t). This definition of equality then automatically satisfies the axioms for equality.

- In some theories it is possible to give ad hoc definitions of equality. For example, in a theory of partial orders with one relation ≤ we could define s = t to be an abbreviation for s ≤ t ∧ t ≤ s.

Inference rules

The inference rule modus ponens is the only one required from propositional logic for the formalization given here. It states that if φ and φ → ψ are both proved, then one can deduce ψ.

The inference rule called Universal Generalization is characteristic of the predicate calculus. It can be stated as

- if

,

then

,

then

where φ is supposed to stand for an already-proven theorem of predicate calculus.

Notice that Generalization is analogous to the Necessitation Rule of modal logic, which is

- if

,

then

,

then  .

.

Quantifier axioms

The following four logical axioms characterize a predicate calculus:

- PRED-1: (∀x Z(x)) → Z(t)

- PRED-2: Z(t) → (∃x Z(x))

- PRED-3: (∀x (W → Z(x))) → (W → ∀x Z(x))

- PRED-4: (∀x (Z(x) → W)) → (∃x Z(x) → W)

These are actually axiom schemata: the expression W stands for any wff in which x is not free, and the expression Z(x) stands for any wff with the additional convention that Z(t) stands for the result of substitution of the term t for x in Z(x).

Predicate calculus

The predicate calculus is an extension of the propositional calculus that defines which statements of first order logic are provable. It is a formal system used to describe mathematical theories. If the propositional calculus is defined with a suitable set of axioms and the single rule of inference modus ponens (this can be done in many different ways), then the predicate calculus can be defined by appending some additional axioms and the additional inference rule "universal generalization". More precisely, as axioms for the predicate calculus we take:

- All tautologies from the propositional calculus (with the proposition variables replaced by formulas).

- The axioms for quantifiers, given above.

- The axioms for equality given above, if equality is regarded as a logical concept.

A sentence is defined to be provable in first order logic if it can be obtained by starting with the axioms of the predicate calculus and repeatedly applying the inference rules "modus ponens" and "universal generalization".

If we have a theory T (a set of statements, called axioms, in some language) then a sentence φ is defined to be provable in the theory T if a ∧ b ∧ ... → φ is provable in first order logic, for some finite set of axioms a, b,... of the theory T.

One apparent problem with this definition of provability is that it seems rather ad hoc: we have taken some apparently random collection of axioms and rules of inference, and it is far from clear that we have not accidently missed out some vital axiom or rule. Gödel's completeness theorem assures us that this is not really a problem: the theorem states that any statement true in all models is provable in first order logic. In particular, any reasonable definition of "provable" in first order logic must be equivalent to the one above (though it is possible for the lengths of proofs to differ vastly for different definitions of provability).

There are many different (but equivalent) ways to define provability. The definition above is a typical example of a "Hilbert style" calculus, which has a lot of different axioms but very few rules of inference. The "Gentzen style" predicate calculus differs in that it has very few axioms but many rules of inference.

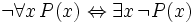

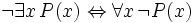

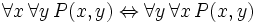

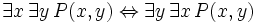

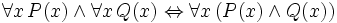

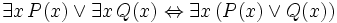

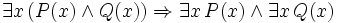

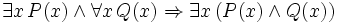

Identities

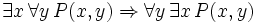

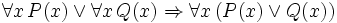

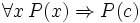

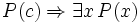

Inference rules

(If

c is a variable, then it must not already be quantified somewhere in

P(x))

(If

c is a variable, then it must not already be quantified somewhere in

P(x))

(x must not appear free in P(c))

(x must not appear free in P(c))

Metalogical theorems of first-order logic

Some important metalogical theorems are listed below in bulleted form.

- Unlike the propositional logic, first-order logic is undecidable, provided that the language has at least one predicate of valence at least 2. There is provably no decision procedure for determining for an arbitrary formula P, whether P is valid (see Halting problem). (Results came independently from Church and Turing.)

- The decision problem for validity is semidecidable; in other words, there is a Turing machine that when given any sentence as input, will halt if and only if the sentence is valid (true in all models). As Gödel's completeness theorem shows, for any valid formula P, P is provable.

- Monadic predicate logic (i.e., predicate logic with only predicates of one argument) is decidable.

Comparison with other logics

- Typed first order logic allows variables and terms to have various types (or sorts). If there are only a finite number of types, this does not really differ much from first order logic, because one can describe the types with a finite number of unary predicates and a few axioms. Sometimes there is a special type Ω of truth values, in which case formulas are just terms of type Ω.

- Weak second order logic allows quantification over finite subsets.

- Monadic second order logic allows quantification over subsets, or in other words over unary predicates.

- Second order logic allows quantification over subsets and relations, or in other words over all predicates. For example, equality can be defined in second-order logic by x = y ≡def ∀P (P(x) ↔ P(y)). Quantification over predicates is not permitted in first-order logic.

- Higher order logics allows quantification over more general things, such as relations between relations.

- Intuitionistic first order logic uses intuitionistic rather than classical propositional calculus; for example, ¬¬φ need not be equivalent to φ.

- Modal logic has extra modal operators with informal meanings such as "it is necessary that φ" and "it is possible that φ".

- Infinitary logic allows infinitely long sentences. For example, one may allow a conjunction or disjunction of infinitely many formulas, or quantification over infinitely many variables. Infinitely long sentences arise in mathematics (e.g., topology) and metamathematics (e.g., model theory).

- First order logic with extra quantifiers has new quantifiers Qx,..., with meanings such as "there are many x such that ...". Also see branched quantification and the plural quantifiers of George Boolos and others.

- Independence-friendly logic is characterized by branching quantifiers, which allows to express independence between quantified variables.

Most of these logics are in some sense extensions of first order logic: they include all the quantifiers and logical operators of first order logic with the same meanings. Lindström showed first order logic has no extensions (other than itself) that satisfy both the compactness theorem and the downward Löwenheim-Skolem theorem. A precise statement of this theorem requires listing several pages of technical conditions that the logic is assumed to satisfy; for example, changing the symbols of a language should make no essential difference to which sentences are true.

First order logic in which no atomic sentence lies in the scope of more than three quantifiers, has the same expressive power as the relation algebra of Tarski and Givant (1987). They also show that FOL with a primitive ordered pair, and a relation algebra including the two ordered pair projection relations, are equivalent.

See also

- Gödel's incompleteness theorem

- Gödel's completeness theorem

- List of rules of inference

- Mathematical logic

- List of first-order theories

- Propositional logic

References

- David Hilbert and Wilhelm Ackermann (1928). Grundzüge der theoretischen Logik (Principles of Theoretical Logic). Springer-Verlag, ISBN 0-8218-2024-9.

- Article on classical logic by Stewart Shapiro at the Stanford Encyclopedia of Philosophy, which covers the definition, model theory and soundness and completeness results for first-order logic characterised in a natural deduction style.

- Introduction to mathematical logic by Karl Podnieks.

- Metamath: a project to construct mathematics using an axiomatic system based on propositional calculus, predicate calculus, and set theory